On the “MAGICAL” benchmark

Published:

Hi there, I’m Giovanni and this is my very first blog post on my personal site. I’m studying CS at the University of Padova and I’m doing my M.Sc. thesis on how to make a neural network understand abstract reasoning. This has led me to read plenty of articles on the topic and, along the way, to discover datasets that try to tackle the task.

Today I’m going to present “The MAGICAL Benchmark for Robust Imitation” by S. Toyer et al. (2020), and I’ll try to relate it to another paper I read recently, “Shortcut Learning in Deep Neural Networks” by R. Geirhos et al. (2020).

The problem of abstract reasoning

What is abstract reasoning

What is abstract reasoning? It is hard to define precisely, but we can summarize it as two main abilities:

- Understand real concepts that are not directly tied to concrete physical objects or experiences;

- Absorb information from senses and make connections to the wider world.

For example, we may think about how to break a project down into small, chronologically ordered pieces - and this would be a concrete task - but we should also be able to abstract why it is important to do so.

The world is full of abstract reasoning - and humans are really good at it. One example is irony: the ability to look at the world, recognize its discrepancies and make jokes about them. Another is the famous “Eureka!”, which Archimedes is said to have shouted when he understood how to measure the volume of an object with water. He observed the water spilling out of his bath while dipping an object into it. Then he linked those two concrete observations into an abstract one: the volume of the water that spills out is the same as the volume of the object inside the bath.

Another useful example of abstract reasoning is the concept of monotonicity: it can be applied to functions, but also to colors (looking, for example, at the wavelength of the reflected light), to sounds, to economic trends, and more.
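To make the idea a bit more tangible, here is a minimal Python sketch of how one abstract notion can be applied to very different kinds of data. The function name `is_monotonic` and all the sample values are my own illustrative assumptions (the wavelengths are only rough figures), not something taken from the papers discussed here.

```python
from typing import Sequence


def is_monotonic(values: Sequence[float]) -> bool:
    """Return True if the sequence never decreases or never increases."""
    pairs = list(zip(values, values[1:]))
    non_decreasing = all(a <= b for a, b in pairs)
    non_increasing = all(a >= b for a, b in pairs)
    return non_decreasing or non_increasing


# Hypothetical sample data, chosen only for illustration.
squares = [x * x for x in range(5)]          # f(x) = x^2 sampled on x >= 0
wavelengths_nm = [450, 520, 580, 620, 700]   # rough blue-to-red ordering
prices = [10.0, 12.5, 11.0, 13.0]            # made-up price series with a dip

print(is_monotonic(squares))
print(is_monotonic(wavelengths_nm))
print(is_monotonic(prices))
```

The same check works on a function, a color ordering and a price trend without knowing anything about what the numbers mean, which is exactly the kind of transfer that abstract reasoning is about.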


Are neural networks capable of abstract reasoning?

In the last decade we’ve seen neural networks perform progressively better - in many respects even better than humans: super-human performance at recognizing images, super-human performance at many games like Go, chess and StarCraft, and more.

However, are they really able to abstract the meaning of the game, the images, the contents that they analyze?

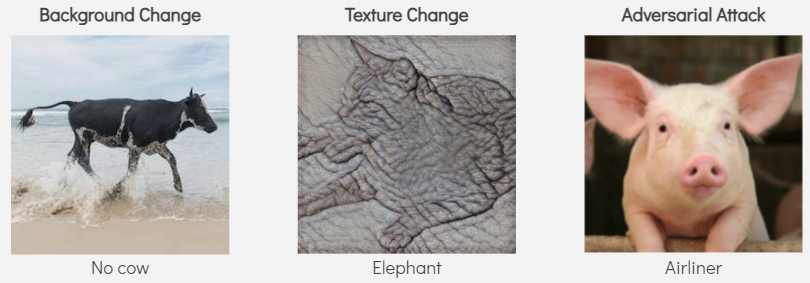

Actually, nope. It has been shown that a slight change in background or texture, or an imperceptible change in gamma, is enough to completely scramble the perception of a super-advanced neural network. We can say that they are missing higher-level information about the target, that they can’t abstract the idea… of a cow.